This video contains proprietary information and cannot be shared publicly at this time.

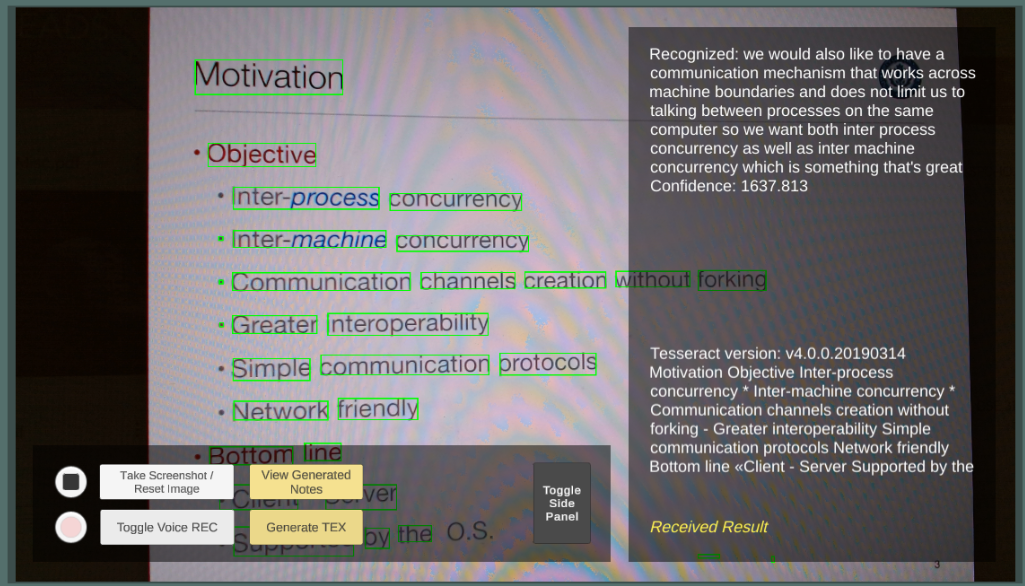

Figure 1

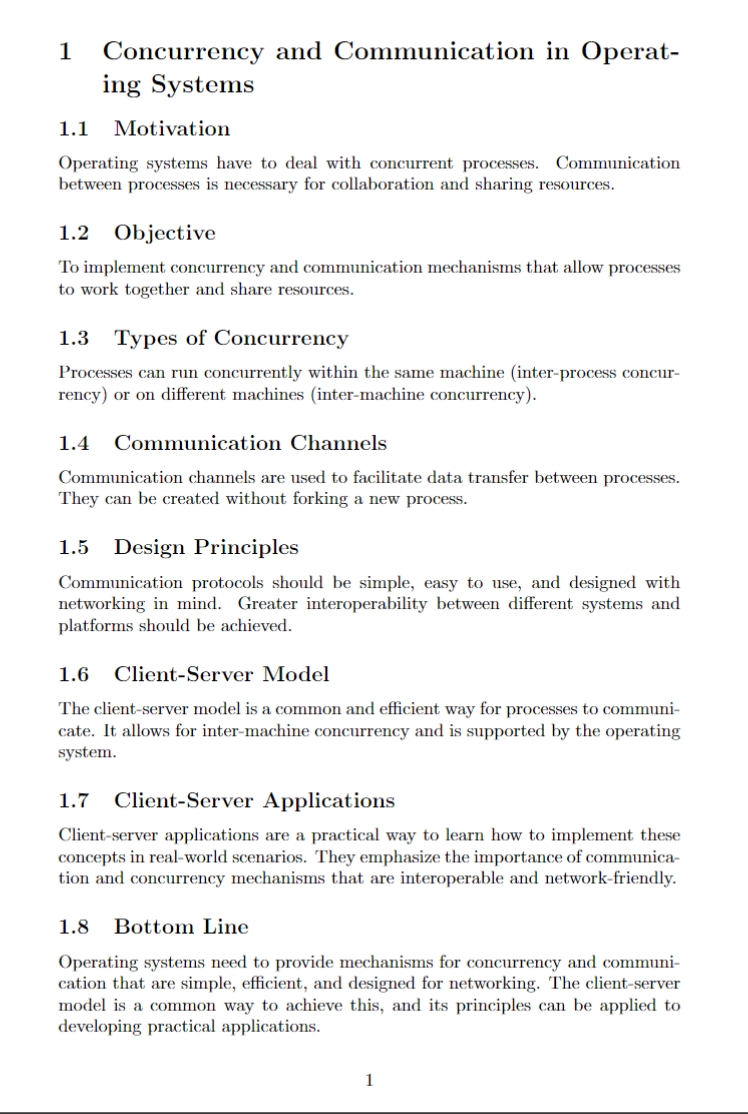

Figure 2

Team 7

| Team Members | Faculty Advisor |
| --- | --- |
| Teagan D'addeo | Fei Miao |

Sponsor: Other

sponsored by

Student Sponsored

ARcademic

The ARcademic project, an integrated augmented reality (AR) application for both Windows and Android, is designed to assist students in the classroom by automatically organizing discussion and lecture content while they simply attend. The application uses audio and video information in tandem to recognize content in real time and parse the session into accurate, professionally formatted notes that can be easily viewed or exported in LaTeX format. For optical character recognition, we used the Tesseract API to extract words and characters from an image captured by a phone camera or webcam and pass them to our post-processing model. To simultaneously interpret the lecturer's speech, we implemented a Vosk dynamic model, which runs offline and has been optimized both to take up little space on the device and to process data quickly, even on hardware that is not optimized for AI workloads. With the help of the Kaldi framework and an array of finite-state transducers (FSTs), ARcademic includes a system for declaring your subject or environment, which improves speech recognition in even the most jargon-heavy courses. Our application is further powered by GPT-3.5, which enables our central notes compilation feature and helps ensure clean output.
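
To make the final export step more concrete, here is a minimal sketch of how timestamped text from the two recognition channels could be interleaved and written out as LaTeX. It is an illustration under stated assumptions, not ARcademic's actual implementation: the `Segment` type and the `escape_latex`, `merge_segments`, and `to_latex` functions are hypothetical names, and the sketch stands in for the post-processing model and GPT-3.5 compilation described above with a plain chronological merge.

```python
from dataclasses import dataclass

# Characters LaTeX treats specially, mapped to safe replacements.
_LATEX_ESCAPES = {
    "\\": r"\textbackslash{}",
    "&": r"\&",
    "%": r"\%",
    "$": r"\$",
    "#": r"\#",
    "_": r"\_",
    "{": r"\{",
    "}": r"\}",
    "~": r"\textasciitilde{}",
    "^": r"\textasciicircum{}",
}


def escape_latex(text: str) -> str:
    """Escape special characters one at a time so nothing is escaped twice."""
    return "".join(_LATEX_ESCAPES.get(ch, ch) for ch in text)


@dataclass
class Segment:
    """Hypothetical unit of recognized text (not ARcademic's real data model)."""
    start: float  # seconds since the session began
    source: str   # "ocr" for camera text, "speech" for transcribed audio
    text: str


def merge_segments(ocr: list[Segment], speech: list[Segment]) -> list[Segment]:
    """Interleave both channels in chronological order."""
    return sorted([*ocr, *speech], key=lambda seg: seg.start)


def to_latex(title: str, segments: list[Segment]) -> str:
    """Render the merged segments as a small standalone LaTeX document."""
    items = []
    for seg in segments:
        label = "Board" if seg.source == "ocr" else "Spoken"
        items.append(r"\item[" + label + "] " + escape_latex(seg.text))

    lines = [
        r"\documentclass{article}",
        r"\begin{document}",
        r"\section*{" + escape_latex(title) + "}",
    ]
    if items:  # an empty description environment would not compile
        lines += [r"\begin{description}", *items, r"\end{description}"]
    lines.append(r"\end{document}")
    return "\n".join(lines)
```

For example, calling `to_latex("Lecture 1", merge_segments(ocr_segments, speech_segments))` would return a string that can be saved as a `.tex` file. Escaping is done per character because recognized text often contains symbols such as `%`, `_`, or `&` that would otherwise break compilation.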